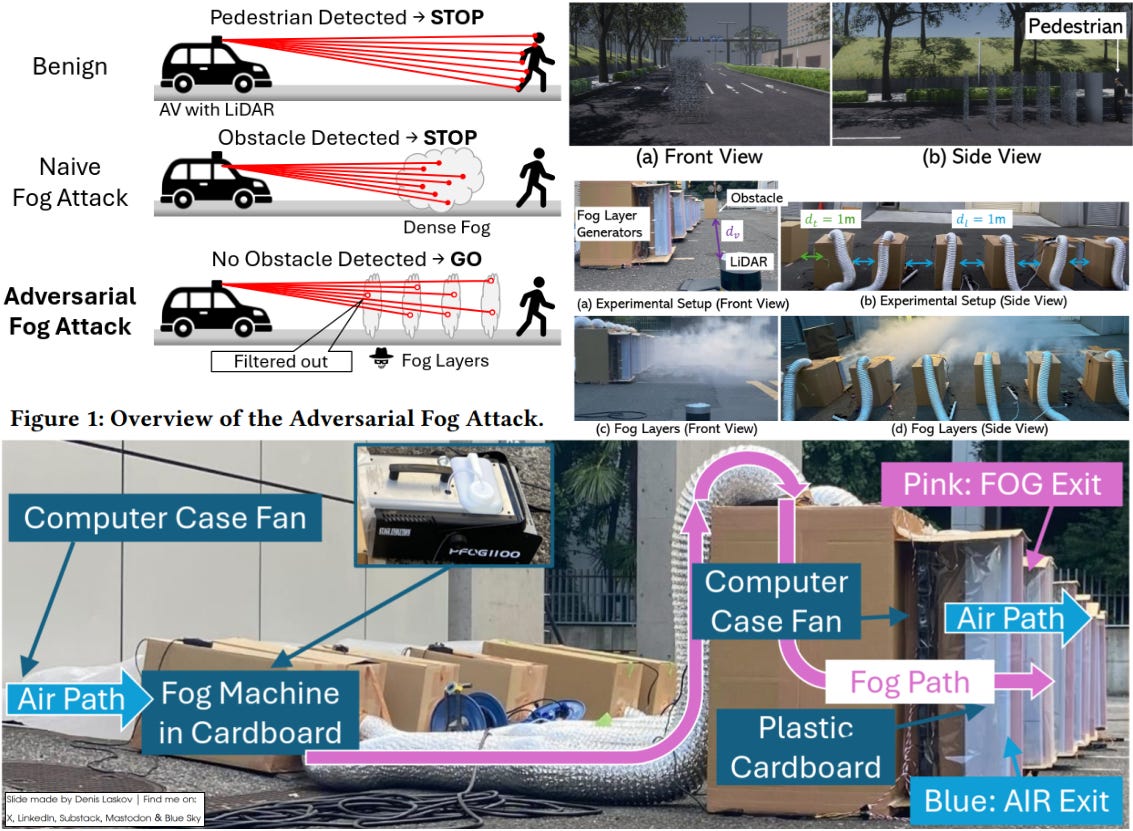

Hacking self-driving cars with a smoke machine: Adversarial Fog Attack (AFA) on modern LiDAR systems. 🚶♀️➡️💨🚗😱☠️

A group of security and automotive experts from Waseda University in Shinjuku, Japan, published research last month revealing a new class of vulnerabilities in density-based object detection methods used by LiDAR sensors.

Artificially generated fog can be used to trick the LiDAR sensors in self-driving cars. By exploiting the way point cloud preprocessing filters in LiDAR systems remove “noise,” an attacker can cause real obstacles, such as pedestrians or cars, to be erased from the sensor data.
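
To make the idea concrete, here is a toy sketch of a density-based "noise" filter. It is not the filter or the attack from the paper: the function `radius_outlier_filter`, its parameters, the point coordinates, and the assumption that fog simply thins out the returns from an obstacle are all made up for illustration.

```python
import math

def radius_outlier_filter(points, radius=0.5, min_neighbors=3):
    """Keep a point only if at least `min_neighbors` other points lie within `radius` metres."""
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1
            for j, q in enumerate(points)
            if i != j and math.dist(p, q) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept

# Hypothetical pedestrian 10 m ahead: a 3x3 grid of returns spaced 0.2 m apart.
pedestrian_clear = [(10.0, y * 0.2, z * 0.2) for y in range(3) for z in range(3)]

# Assumption for illustration: fog weakens the returns so only every 4th point survives.
pedestrian_fogged = pedestrian_clear[::4]

print(len(radius_outlier_filter(pedestrian_clear)))   # 9: dense cluster passes the filter
print(len(radius_outlier_filter(pedestrian_fogged)))  # 0: sparse cluster is dropped as "noise"
```

In this toy setup the filter cannot tell a thinned-out pedestrian from stray noise points, so it deletes both.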

All you need to do is understand the preprocessing mechanism and generate the right amount of smoke. Wow!

Enjoy the read, and please share it with your colleagues and friends. And if you happen to have a smoke machine - keep it, just in case. :)

More details:

Adversarial Fog: Exploiting the Vulnerabilities of LiDAR Point Cloud Preprocessing Filters [PDF]: https://dl.acm.org/doi/10.1145/3708821.3733904